Category: Big win

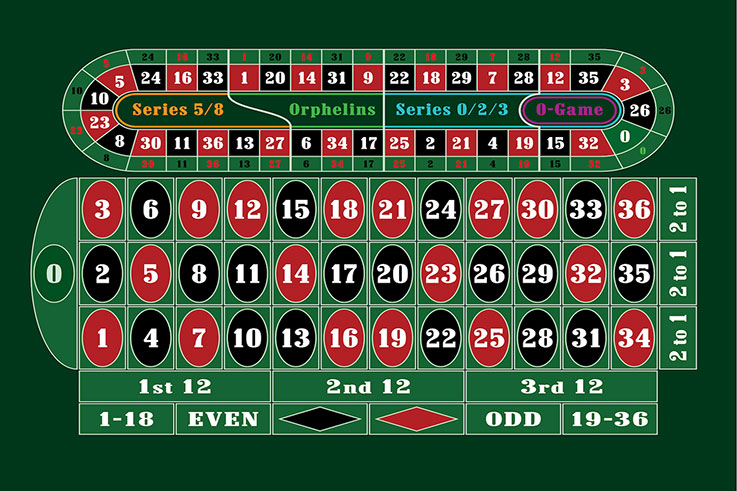

Roulette Bets Roulette

Samuzshura 08.01.2024

Variety of Bingo Games

Sagal 03.01.2024

Poker welcome bonus

Shaktizilkree 03.01.2024

Chance to win prizes

Moogur 02.01.2024

Trophies Innovative Projects

Dokus 31.12.2023

Slots Reload Offers

Zolorn 28.12.2023

Cashback on Office Supplies

Tojalrajas 28.12.2023

Virtual interactive fun

Shacage 26.12.2023

Extra cash

Kalar 20.12.2023

Free Bingo Cards

Yozahn 09.12.2023

Gift Raffle Prize

Mimuro 03.12.2023

Secure payments in poker rooms

Gut 03.12.2023